Loading packages in pyspark jupyter notebook/lab

Using spark in an interactive way it's a bit cumbersome sometimes if you don't want to go to the good old terminal and you decide something like a jupyter notebook better suits you. Or you are doing a more complex analysis also.

If that is the case and you don't want the trouble of setting-up the infrastructure provided by a cloud provider, and you are just using spark because you are used to it, want to try it or scales well, then using pyspark it's great due to the seamless integration with data science and visualization tools.

Just install the package from pip, and you are ready to go!

from pyspark.sql import SparkSession

spark = SparkSession.builder \

.master("local[*]") \

.appName("myApp") \

.config("spark.driver.memory", "32g") \

.getOrCreate()You can find some problems however if you want to use those extra utilities coming from the spark packages. Reading from avro, kafka... using graph frames or anything you can imagine it's easy if you use the command line to start-up a shell or submitting a package. Just write --package and are good to go.

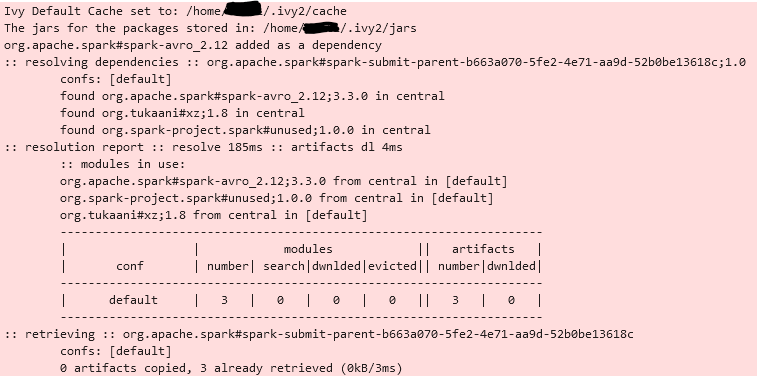

However, if you want to do it from a jupyter instance there are some solutions not working well. The easiest one is not well documented, but it works like a charm. Just add an extra config to your spark session builder ( spark.jars.packages ) and restart the kernel.

from pyspark.sql import SparkSession

spark = SparkSession.builder \

.master("local[*]") \

.appName("myapp") \

.config("spark.jars.packages", "org.apache.spark:spark-avro_2.12:3.3.0,org.package2....")\

.config("spark.driver.memory", "32g") \

.getOrCreate()

That's it. as easy as it gets!