Configure Nginx as a reverse proxy for Spark UI

Nginx is a web server commonly used as a reverse proxy. It can add TLS and basic authentication, and it can forward requests to other internal servers on your network. In this case, we are going to serve the Spark UI over HTTPS, behind authentication, and under a different location: myserver.com/spark.

Prerequisites

- Spark installed somewhere. In our test setup, it runs on the same server as Nginx.

- Basic Nginx knowledge.

- Nginx built with `http_sub_module`. The module is not built by default when compiling Nginx from source (it needs `--with-http_sub_module`), but most distribution packages include it. You can check with `nginx -V 2>&1 | grep -o with-http_sub_module`; the `2>&1` is needed because `nginx -V` prints to stderr.

Set up basic authentication (optional)

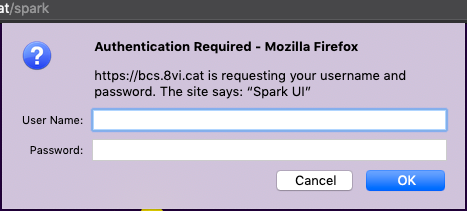

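This step is straightforward: create a file with the users and their passwords, then reference that file from the location block in your site's config file. The `htpasswd` tool ships with the Apache utilities package (`apache2-utils` on Debian/Ubuntu). The Nginx documentation has a more detailed guide.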

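```shell
# First user only: '-c' creates the file (and truncates it if it already exists)
$ sudo htpasswd -c -b /etc/nginx/htpasswd User Password

# Execute for each additional user (without '-c')
$ sudo htpasswd -b /etc/nginx/htpasswd User Password
```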

Then add the following directives to every location block you want to protect:

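```nginx
auth_basic "Put here any message you wish";
# A relative path is resolved against the Nginx configuration directory (e.g. /etc/nginx)
auth_basic_user_file htpasswd;
```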

Spark UI is not prepared for reverse proxies

When I first tried to set this up, I thought it would be pretty easy: with many other services, adding a parameter that tells the service which URL the user will see is enough.

Looking through the documentation, I found two settings that looked like they could do the trick: `spark.ui.reverseProxy` and `spark.ui.reverseProxyUrl`. Promising!

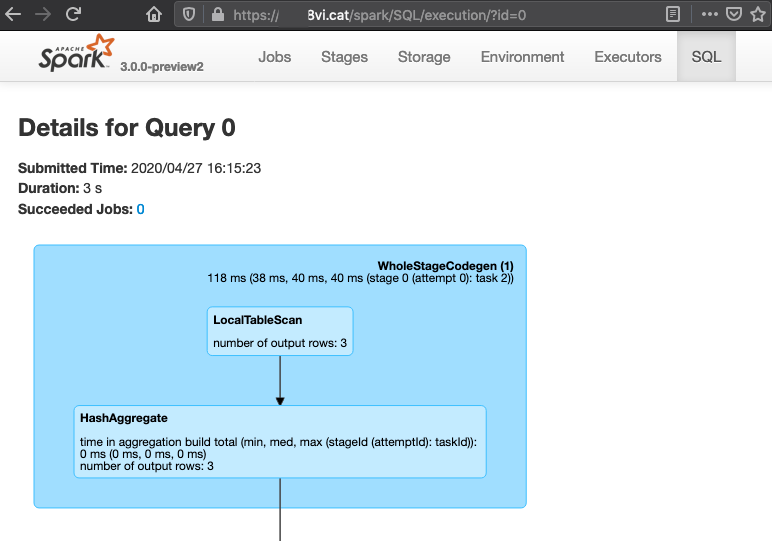

I tried them and, at first, it seemed to work. Spark printed the following message:

```console
$ spark/bin/spark-shell --conf='spark.ui.reverseProxy=true' --conf='spark.ui.reverseProxyUrl=https://8vi.cat/spark'
...
Spark Context Web UI is available at https://8vi.cat/spark/proxy/local-1587994999941
```

It is not ideal: the URL contains a different application ID for every run, and I could not get Nginx to forward my connection to the Spark UI. Furthermore, I would have to remember to pass those settings every time I run Spark, so it is not the best option IMO.
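The "remember to add them every time" part, at least, can be avoided: `spark-shell` and `spark-submit` also read `conf/spark-defaults.conf` (or the same file in `$SPARK_CONF_DIR`, if set), where each line holds a key and a value separated by whitespace. Below is a minimal sketch that persists the two settings; `persist_reverse_proxy_conf` is a hypothetical helper name, and the sketch does nothing about the changing application ID in the URL.

```shell
# Sketch: persist the reverse-proxy settings in spark-defaults.conf so they no
# longer have to be passed with --conf on every run.
# persist_reverse_proxy_conf is a hypothetical helper, not part of Spark.
# Usage: persist_reverse_proxy_conf CONF_DIR PUBLIC_URL
persist_reverse_proxy_conf() {
    conf_file="$1/spark-defaults.conf"
    mkdir -p "$1"

    # Drop earlier values for these two keys so re-running does not duplicate them.
    if [ -f "$conf_file" ]; then
        grep -v -E '^spark\.ui\.reverseProxy(Url)?[[:space:]]' "$conf_file" > "$conf_file.tmp" || true
        mv "$conf_file.tmp" "$conf_file"
    fi

    # One "key value" pair per line, separated by whitespace.
    printf '%s %s\n' \
        spark.ui.reverseProxy true \
        spark.ui.reverseProxyUrl "$2" >> "$conf_file"
}
```

For example: `persist_reverse_proxy_conf "$SPARK_HOME/conf" https://8vi.cat/spark`. Other lines already in the file are kept as they are.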

Let's investigate a little more. I came across a three-year-old improvement proposal, SPARK-20044, that looked very close to what I was trying to achieve:

> Purpose: allow to run the Spark web UI behind a reverse proxy with URLs prefixed by a context root, like www.mydomain.com/spark. In particular, this allows to access multiple Spark clusters through the same virtual host, only distinguishing them by context root, like www.mydomain.com/cluster1, www.mydomain.com/cluster2, and it allows to run the Spark UI in a common cookie domain (for SSO) with other services.

This looks promising. There is a PR for it and... oh, it never got merged into open-source Spark :( Not everything is lost, though: the proposal shows how to set up the Nginx configuration so that it can serve our UI.

```nginx
server {
    server_name abc.xyj;

    # spark ui configuration
    set $SPARK_MASTER http://127.0.0.1:4040;

    # redirect master UI path without terminating slash,
    # so that relative URLs are resolved correctly
    location ~ ^(?<prefix>/spark$) {
        return 302 $scheme://$host:$server_port$prefix/;
    }

    # split spark UI path into prefix and local path within master UI
    location ~ ^(?<prefix>/spark)(?<local_path>/.*) {
        # Set authentication
        auth_basic "Spark UI";
        auth_basic_user_file htpasswd;

        # Modify html payloads to redirect links to the correct subfolder
        proxy_set_header Accept-Encoding "";
        sub_filter_types *;
        sub_filter 'href="/' 'href="/spark/';
        sub_filter 'src="/' 'src="/spark/';
        sub_filter_once off;

        # strip prefix when forwarding request
        rewrite ^ $local_path break;

        # forward to the Spark UI (4040 is the default port of the driver's application UI)
        proxy_pass $SPARK_MASTER;

        # fix host (implicit) and add prefix on redirects
        proxy_redirect $SPARK_MASTER $prefix;
    }

    # ... more things here
}
```
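To check the path handling of the two location blocks without running Nginx or Spark, here is a rough POSIX shell model of it. It is only a sketch: `route_spark_request` and `rewrite_location` are hypothetical helper names, `case` glob patterns stand in for the PCRE regexes, and only the path is modelled (Nginx keeps the query string when it rewrites).

```shell
# Sketch: mimic how the two location blocks route a request path.
# route_spark_request and rewrite_location are hypothetical helper names.
# Only the path is modelled; Nginx also keeps the query string.

SPARK_UPSTREAM="http://127.0.0.1:4040"   # same value as $SPARK_MASTER
PREFIX="/spark"

# route_spark_request URI: print what Nginx would do with this request path.
route_spark_request() {
    case $1 in
        "$PREFIX")
            # location ~ ^(?<prefix>/spark$): redirect to the path with a trailing
            # slash (Nginx builds an absolute URL from $scheme, $host, $server_port).
            printf '302 %s/\n' "$PREFIX" ;;
        "$PREFIX"/*)
            # location ~ ^(?<prefix>/spark)(?<local_path>/.*): strip the prefix
            # (rewrite ^ $local_path break;) and forward to the upstream.
            printf 'proxy %s%s\n' "$SPARK_UPSTREAM" "${1#"$PREFIX"}" ;;
        *)
            printf 'no match\n' ;;
    esac
}

# rewrite_location HEADER_VALUE: mimic proxy_redirect $SPARK_MASTER $prefix;
# a Location value starting with the upstream URL gets the prefix instead.
rewrite_location() {
    case $1 in
        "$SPARK_UPSTREAM"*) printf '%s%s\n' "$PREFIX" "${1#"$SPARK_UPSTREAM"}" ;;
        *) printf '%s\n' "$1" ;;
    esac
}
```

For instance, if Spark answered a request with a redirect to `http://127.0.0.1:4040/jobs/`, `rewrite_location` turns it into `/spark/jobs/`, which lands back in the second location block. A path such as `/sparkling` matches neither block.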

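In this configuration, `sub_filter` rewrites the HTML that the Spark UI sends to the browser, inserting our extra location, `/spark`, into root-relative `href="/...` and `src="/...` links. Clearing the `Accept-Encoding` header makes Spark send uncompressed responses, because `sub_filter` cannot rewrite compressed ones. The `rewrite` directive strips the prefix before the request reaches Spark, and `proxy_redirect` adds it back to the redirects Spark sends.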
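To preview what the two `sub_filter` rules do to a page, here is a rough approximation with `sed`: each `-e` expression mirrors one `sub_filter` line, and the `g` flag plays the role of `sub_filter_once off`. It is only a sketch; `rewrite_spark_html` is a hypothetical helper name, and `sed` works line by line whereas Nginx rewrites the response body as it streams through.

```shell
# Sketch: approximate the two sub_filter rules with sed.
# rewrite_spark_html is a hypothetical helper; it reads HTML on stdin.
rewrite_spark_html() {
    # 's|...|...|g' replaces every occurrence, like sub_filter_once off
    sed -e 's|href="/|href="/spark/|g' \
        -e 's|src="/|src="/spark/|g'
}
```

Usage: `curl -s http://127.0.0.1:4040/jobs/ | rewrite_spark_html`. Because both rules match the literal strings `href="/` and `src="/`, links written any other way (absolute URLs, single-quoted attributes, or URLs built in JavaScript) are left untouched by either version.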

And it works, as you can see in this post's image!
